Apple to Scan iPhone and iCloud Photos for Child Abuse Imagery


Apple has announced it will begin to scan iPhones in the US for child abuse images. Photo: AP

The new service will turn photos on devices into an unreadable set of hashes -- long strings of numbers that act as digital fingerprints -- stored on user devices, the company explained at a press conference.

Those numbers will be matched against a database of hashes provided by the National Center for Missing and Exploited Children, CNN reported.
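The matching step described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the article does not say which hash function Apple uses, so SHA-256 stands in for it here, and the file names and byte strings are hypothetical.

```python
import hashlib


def image_hash(image_bytes):
    """Stand-in fingerprint: SHA-256 of the raw bytes.

    Assumption for illustration only; the article does not name the
    hash function Apple actually uses.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(device_photos, known_hashes):
    """Return the names of photos whose hash appears in the database."""
    return sorted(
        name
        for name, data in device_photos.items()
        if image_hash(data) in known_hashes
    )


# Hypothetical database of hashes (in the article, supplied by NCMEC).
known_hashes = {image_hash(b"known-image-A"), image_hash(b"known-image-B")}

# Hypothetical photos on a device.
device_photos = {
    "IMG_0001.jpg": b"holiday photo",
    "IMG_0002.jpg": b"known-image-A",
}

matches = find_matches(device_photos, known_hashes)
```

Only the photo whose hash is already in the database is reported. A photo that is not in the database cannot match, whatever it shows.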

In taking this step, Apple (AAPL) is following some other big tech companies such as Google (GOOG) and Facebook (FB). But it's also trying to strike a balance between safety and privacy, the latter of which Apple has stressed as a central selling point for its devices.

In a post on its website outlining the updates, the company said: "Apple's method ... is designed with user privacy in mind." Apple emphasized that the tool does not "scan" user photos and only images from the database will be included as matches. (This should mean a user's harmless picture of their child in the bathtub will not be flagged.)

Apple also said a device will create a doubly encrypted "safety voucher" -- a packet of information sent to servers -- that is attached to photos. Once there are a certain number of flagged safety vouchers, Apple's review team will be alerted. It will then decrypt the vouchers, disable the user's account and alert the National Center for Missing and Exploited Children, which can inform law enforcement. Those who think their accounts have been mistakenly flagged can file an appeal to have them reinstated.
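The threshold rule, under which reviewers are alerted only after a certain number of flagged vouchers, can be sketched roughly as follows. The threshold value, the `Account` class and the voucher IDs are all hypothetical. The article gives no number, and encryption is left out entirely.

```python
from dataclasses import dataclass, field

# Hypothetical value; the article only says "a certain number".
REVIEW_THRESHOLD = 3


@dataclass
class Account:
    user_id: str
    flagged_vouchers: list = field(default_factory=list)

    def add_voucher(self, voucher_id, matched):
        """Record a voucher and report whether human review is now due."""
        if matched:
            self.flagged_vouchers.append(voucher_id)
        return len(self.flagged_vouchers) >= REVIEW_THRESHOLD


account = Account("user-123")

# Four uploads, three of which match the database.
results = [
    account.add_voucher(f"v{i}", matched=m)
    for i, m in enumerate([True, False, True, True])
]
```

In this sketch the account is escalated only on the fourth upload, when the third flagged voucher arrives. A single match on its own triggers nothing.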


This May 21, 2021 photo shows the Apple logo displayed on a Mac Pro desktop computer in New York. Apple is planning to scan US iPhones for images of child abuse, drawing applause from child protection groups but raising concern among security researchers that the system could be misused by governments looking to surveil their citizens. Photo: AP

Apple's goal is to ensure that identical and visually similar images produce the same hash, even if an image has been slightly cropped, resized or converted from color to black and white.
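How a hash can survive resizing or a switch to black and white can be shown with a toy "average hash", a simple and well-known perceptual hashing technique. This is a swapped-in illustration, not Apple's algorithm, which the article does not describe. The tiny images are hypothetical, and the sketch does not handle cropping.

```python
def to_gray(pixel):
    """Average the red, green and blue channels into one brightness value."""
    r, g, b = pixel
    return (r + g + b) / 3


def average_hash(image, grid=2):
    """Toy average hash.

    `image` is a list of rows of (r, g, b) tuples whose width and height
    are divisible by `grid`. The image is reduced to grid x grid cells of
    mean brightness; each cell becomes "1" if brighter than the overall
    mean of the cells, else "0".
    """
    height, width = len(image), len(image[0])
    bh, bw = height // grid, width // grid
    cells = []
    for gy in range(grid):
        for gx in range(grid):
            block = [
                to_gray(image[y][x])
                for y in range(gy * bh, (gy + 1) * bh)
                for x in range(gx * bw, (gx + 1) * bw)
            ]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    return "".join("1" if c > mean else "0" for c in cells)


def upscale2x(image):
    """Double the width and height by repeating every pixel."""
    return [[p for p in row for _ in range(2)] for row in image for _ in range(2)]


def to_grayscale(image):
    """Replace every pixel with its gray equivalent."""
    return [[(to_gray(p),) * 3 for p in row] for row in image]


BRIGHT = (255, 200, 145)  # brightness 200
DARK = (30, 60, 0)        # brightness 30

# Hypothetical 4x4 image: bright top-left and bottom-right quarters.
original = [
    [BRIGHT, BRIGHT, DARK, DARK],
    [BRIGHT, BRIGHT, DARK, DARK],
    [DARK, DARK, BRIGHT, BRIGHT],
    [DARK, DARK, BRIGHT, BRIGHT],
]

# A visually different image: bright top half, dark bottom half.
other = [[BRIGHT] * 4, [BRIGHT] * 4, [DARK] * 4, [DARK] * 4]

hash_original = average_hash(original)
hash_resized = average_hash(upscale2x(original))
hash_gray = average_hash(to_grayscale(original))
hash_other = average_hash(other)
```

The resized and grayscale copies produce the same hash as the original, while the visually different image does not.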

The announcement is part of a greater push around child safety from the company. Apple said a new communication tool will also warn users under age 18 when they're about to send or receive a message with an explicit image. The tool, which has to be turned on in Family Sharing, uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit. Parents with children under the age of 13 can additionally turn on a notification feature in the event that a child is about to send or receive a nude image. Apple said it will not get access to the messages.

That tool will be available as a future software update, according to the company.

Drawing applause

“Apple’s expanded protection for children is a game changer,” John Clark, the president and CEO of the National Center for Missing and Exploited Children, said in a statement. “With so many people using Apple products, these new safety measures have lifesaving potential for children.”

Julia Cordua, the CEO of Thorn, said that Apple’s technology balances “the need for privacy with digital safety for children.” Thorn, a nonprofit founded by Demi Moore and Ashton Kutcher, uses technology to help protect children from sexual abuse by identifying victims and working with tech platforms, AP said.

Nicholas Weaver, a computer security expert and lecturer at the University of California at Berkeley, said on Twitter that he didn’t blame Apple for choosing to risk fighting with oppressive regimes and take a tougher stance on child sexual abuse, according to Forbes.

And upset


iPhone photos are going to be scanned by Apple, something that's polarizing privacy experts. Photo: Jens Kalaene/dpa/picture alliance via Getty Images

However, the Washington-based nonprofit Center for Democracy and Technology called on Apple to abandon the changes, which it said effectively destroy the company’s guarantee of “end-to-end encryption.” Scanning of messages for sexually explicit content on phones or computers effectively breaks the security, it said.

The organization also questioned Apple’s technology for differentiating between dangerous content and something as tame as art or a meme. Such technologies are notoriously error-prone, CDT said in an emailed statement.

Alec Muffett, a noted encryption expert and former Facebook security staffer, and other encryption experts like Johns Hopkins professor Matt Green and NSA leaker Edward Snowden have also raised the alarm that Apple could now be pressured into looking for other material on people’s devices, if a government demands it.

“How such a feature might be repurposed in an illiberal state is fairly easy to visualize. Apple is performing proactive surveillance on client-purchased devices in order to defend its own interests, but in the name of child protection,” Muffett added. “What will China want them to block?

“It is already a moral earthquake.”

The Electronic Frontier Foundation (EFF) said that the changes effectively meant Apple was introducing a “backdoor” onto user devices. “Apple can explain at length how its technical implementation will preserve privacy and security in its proposed backdoor, but at the end of the day, even a thoroughly documented, carefully thought-out and narrowly scoped backdoor is still a backdoor,” the EFF wrote.

“Apple’s compromise on end-to-end encryption may appease government agencies in the U.S. and abroad, but it is a shocking about-face for users who have relied on the company’s leadership in privacy and security.”

| Apple expands Independent Repair Provider programme to Vietnam Apple’s Independent Repair Provider program will soon be available in more than 200 countries, including Vietnam. |

| Foxconn recruits 1,000 workers in Vietnam following its US$270 million project Taiwan-based Hon Hai Precision Industry Co., Ltd. (Foxconn), which is Apple’s major supplier, is recruiting 1,000 workers for assembling electronic parts for its plants in ... |

| IPhone 13: Release date, price, design, specs and everything to know Only a few months after Apple launched its latest flagship phone, people are already turning their attention to the 2021 iPhone (it could be the ... |

Recommended

World

World

Pakistan NCRC report explores emerging child rights issues

World

World

"India has right to defend herself against terror," says German Foreign Minister, endorses Op Sindoor

World

World

‘We stand with India’: Japan, UAE back New Delhi over its global outreach against terror

World

World

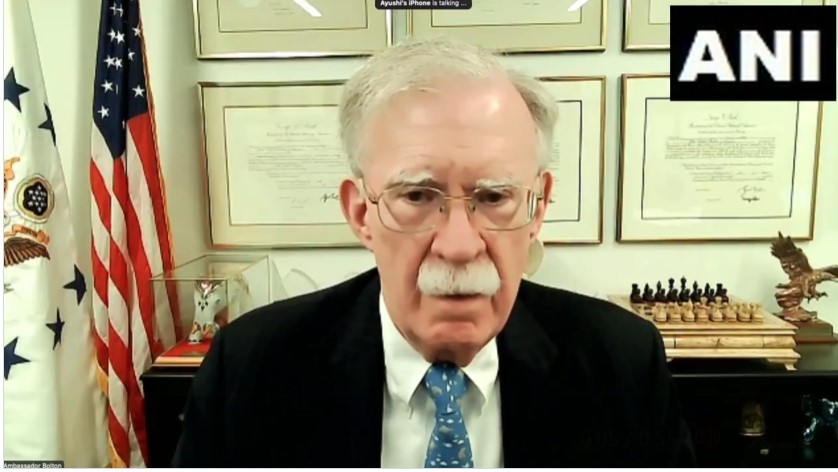

'Action Was Entirely Justifiable': Former US NSA John Bolton Backs India's Right After Pahalgam Attack

World

World

US, China Conclude Trade Talks with Positive Outcome

World

World

Nifty, Sensex jumped more than 2% in opening as India-Pakistan tensions ease

World

World

Easing of US-China Tariffs: Markets React Positively, Experts Remain Cautious

World

World